Social feedback experiment with real and virtual fish

Zebrafish in two-alternative forced choice swarm teleportation experiment

Zebrafish in two-alternative forced choice teleportation experiment

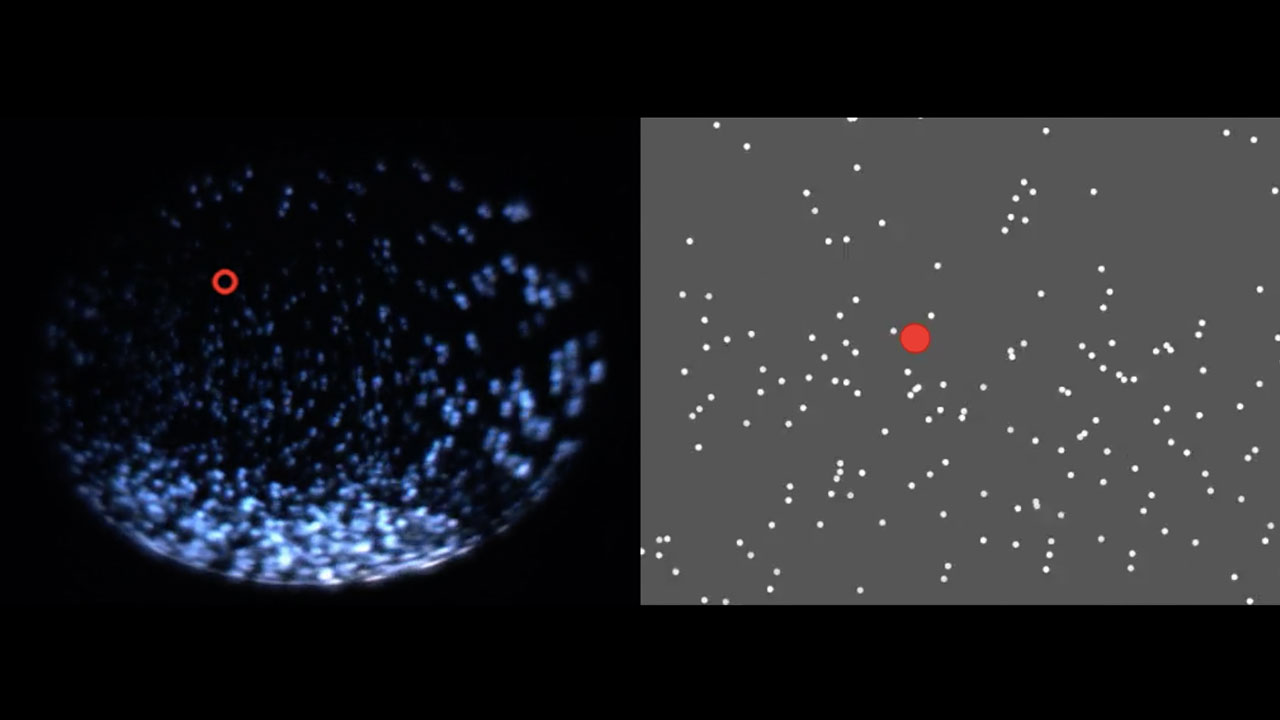

Zebrafish swims among a cloud of 3D dots

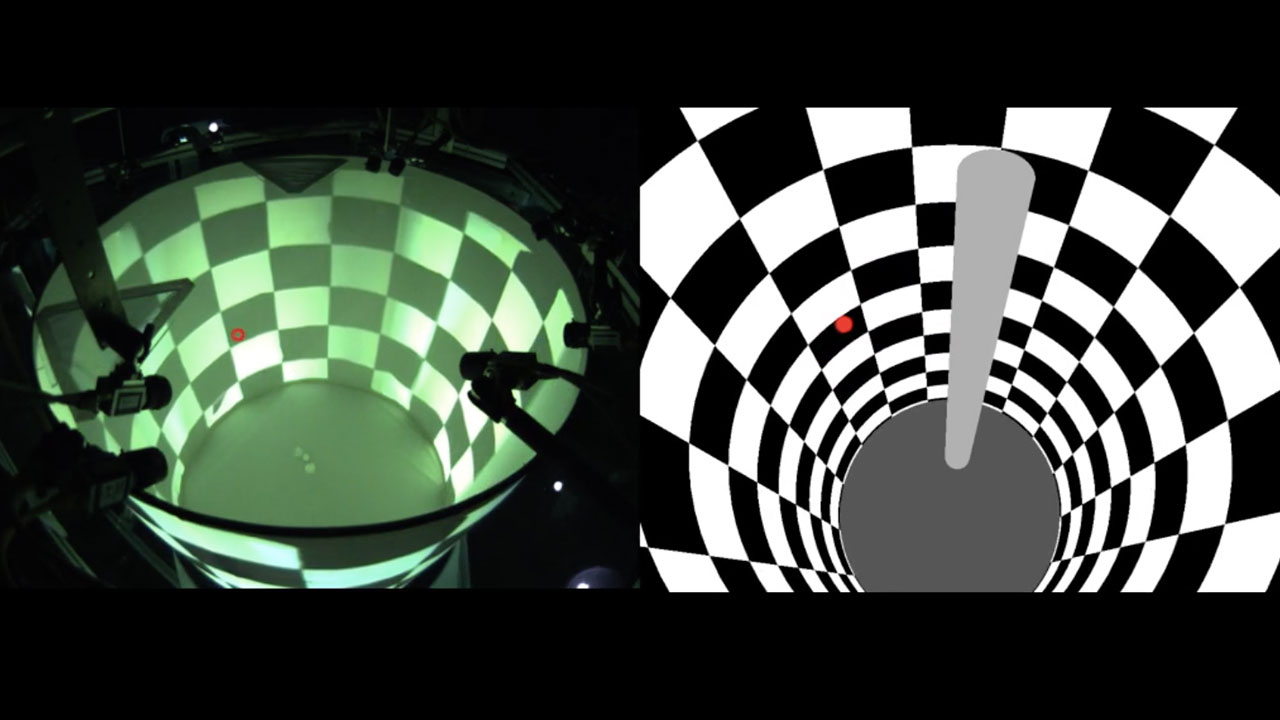

Remote control flies – controlling the behavior of Drosophila by exploiting the optomotor response

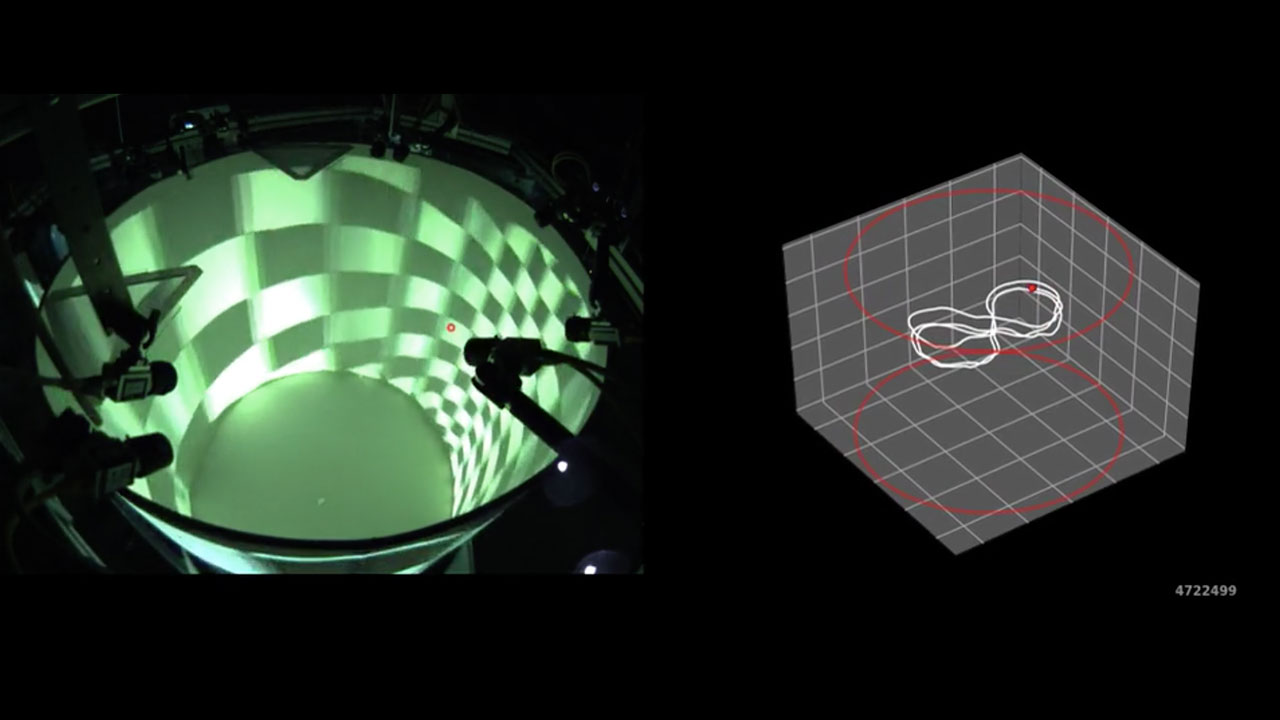

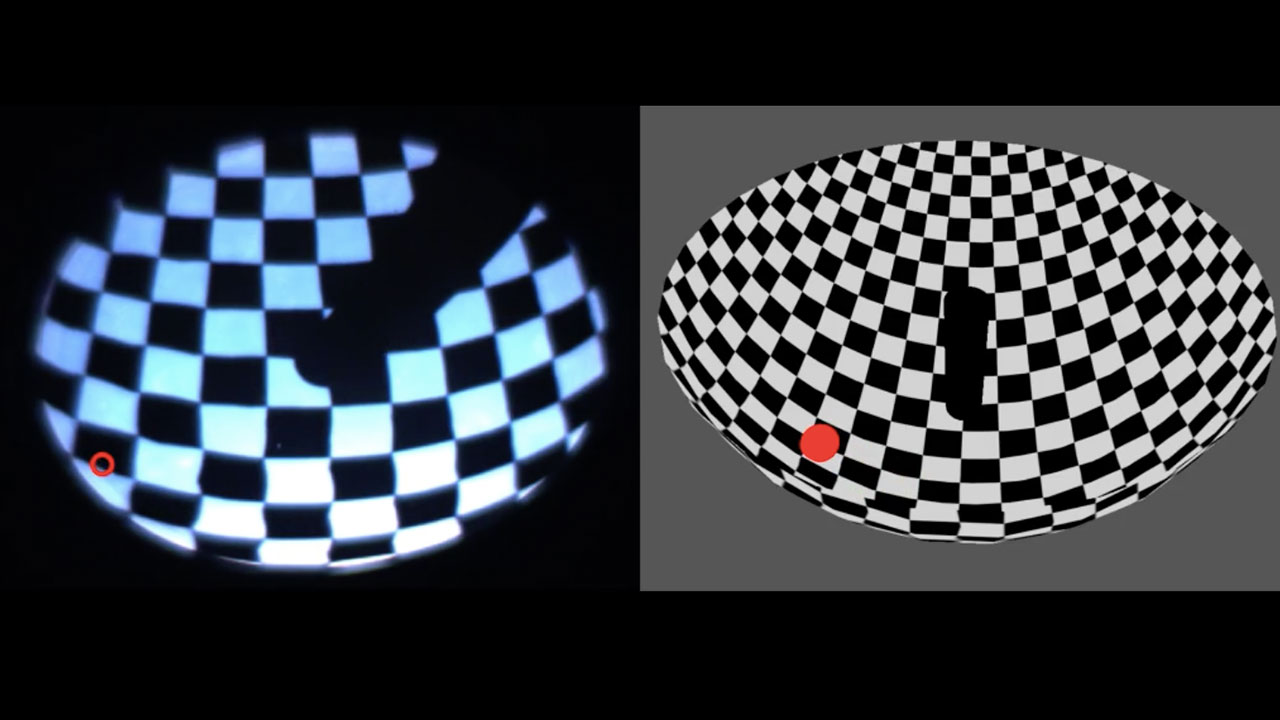

Mouse head tracking

A virtual elevated maze paradigm for freely moving mice

Simulation of a virtual post for freely swimming Zebrafish

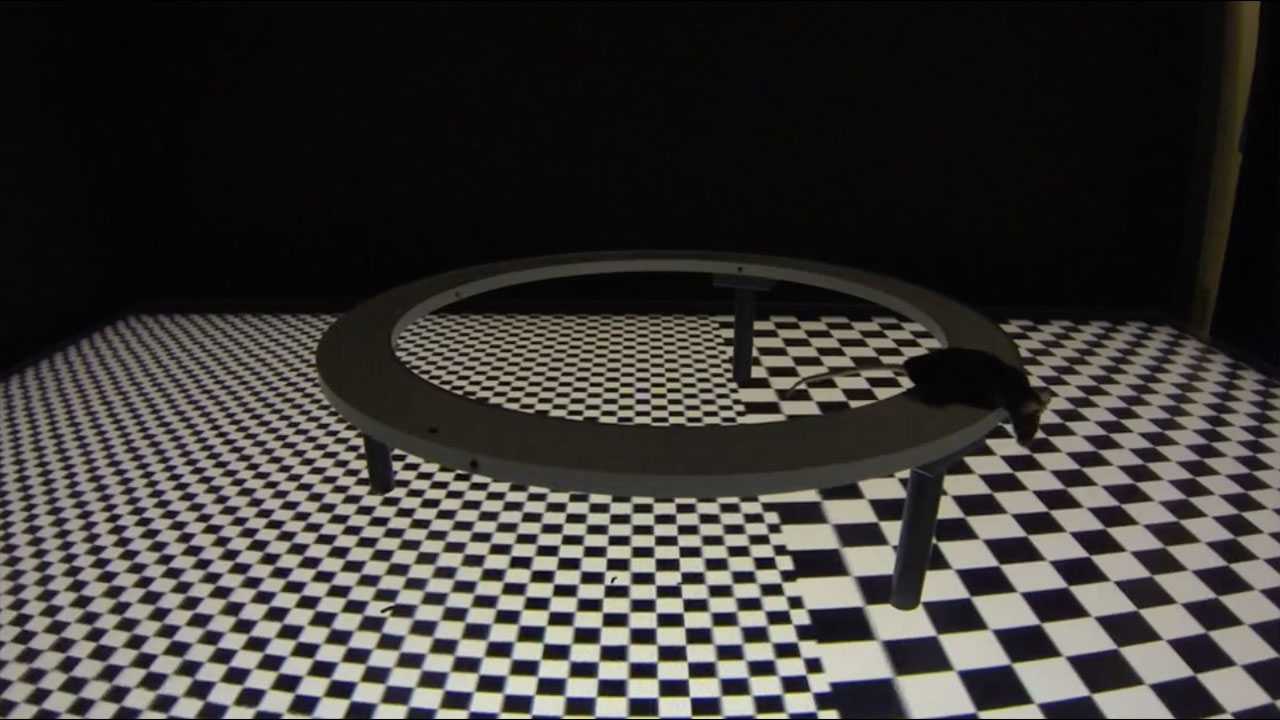

Interaction of a Drosophila with a real post

Simulation of a virtual post for freely flying Drosophila

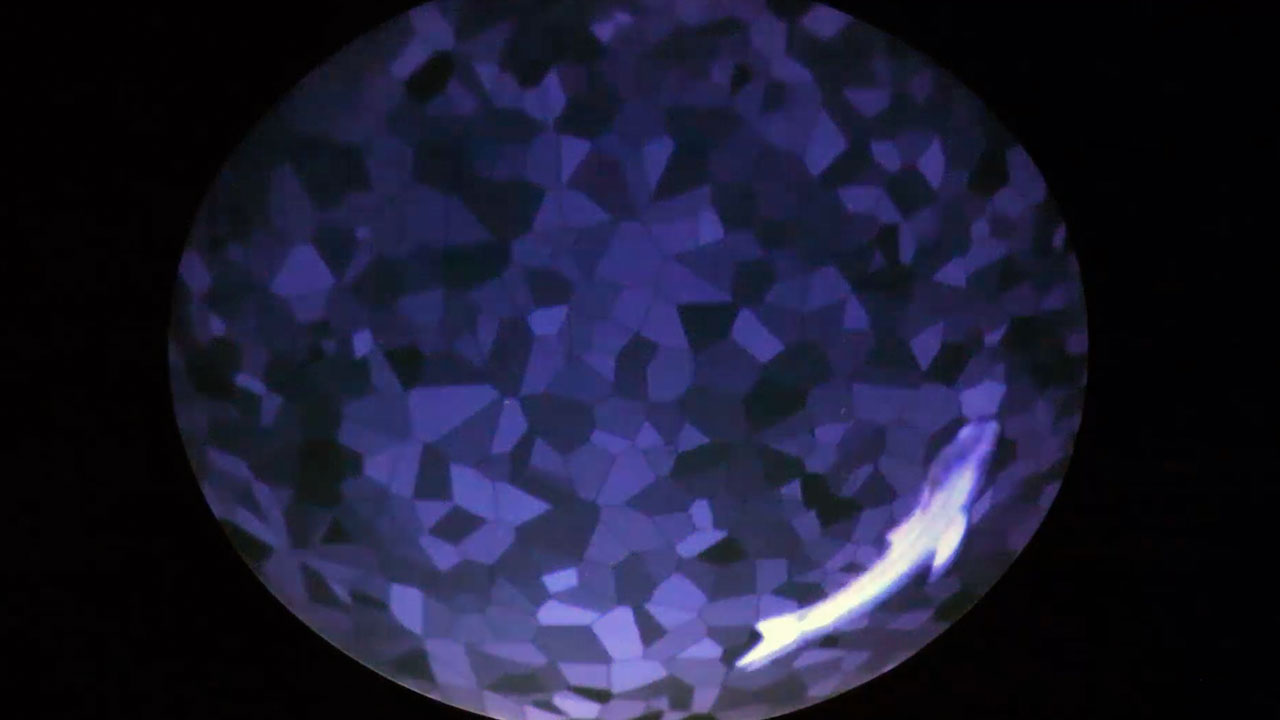

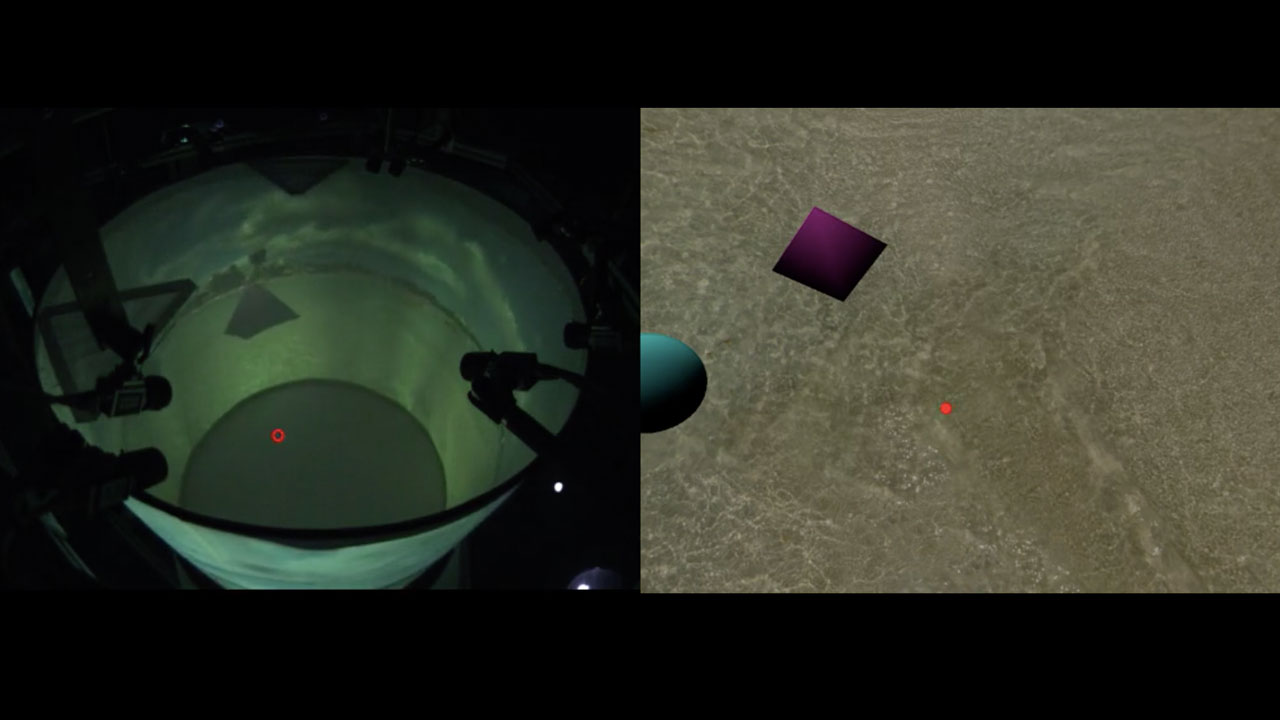

Photo realistic and naturalistic VR for freely flying Drosophila

Photo realistic and naturalistic VR for freely swimming fish

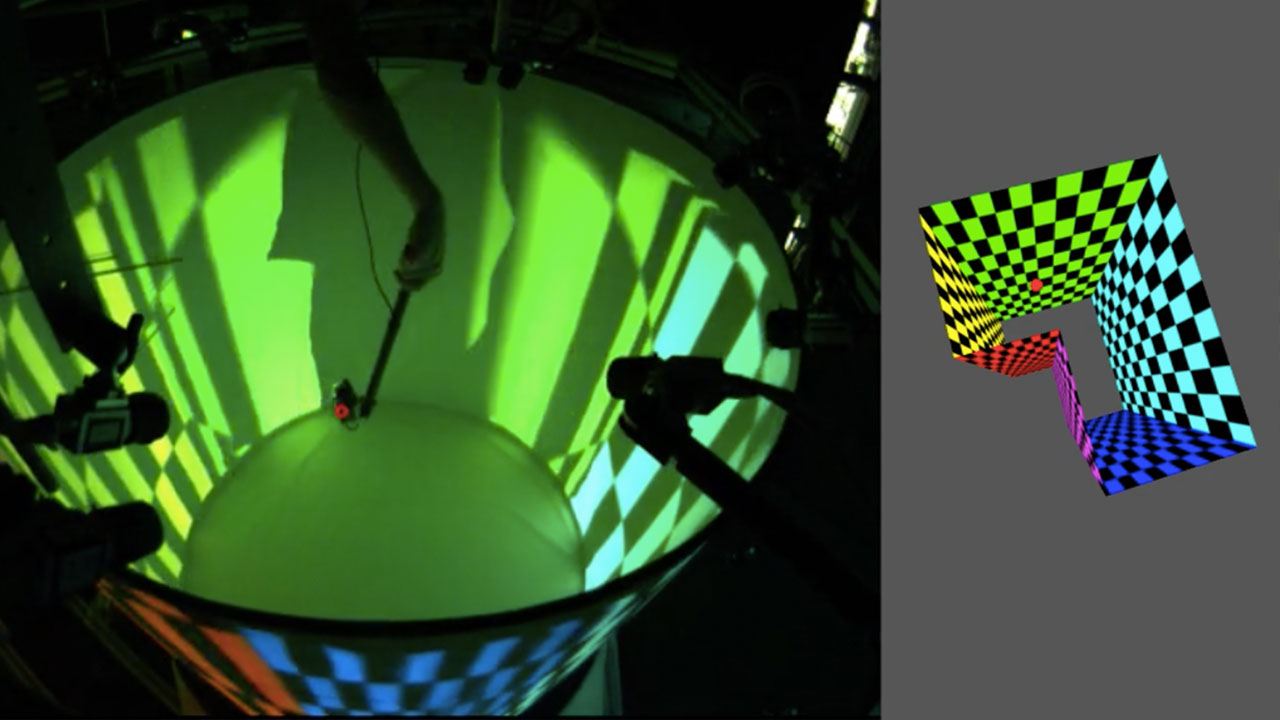

Demonstration of multiple-display perspective correct VR

Demonstration of VR from the perspective of a freely moving observer

We describe a new method, published today in Nature Methods, and demonstrate it in several model species and experiments. The method is “virtual reality for freely moving animals”. The idea is that a small animal, such as a fly, a fish or a mouse, is placed in an experimental chamber whose walls are computer displays. A tracking system determines the exact position of the animal's eyes, and we use that position to display a perspective-correct rendering of a virtual world on the walls. Essentially, the animal is inside a computer game.
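To make the idea of a perspective-correct rendering concrete, here is a minimal geometric sketch. It is not the code used in our system. It assumes a single flat display wall lying in the plane z = 0, with the tracked eye inside the chamber at z > 0, and the function names `project_to_wall` and `off_axis_frustum` are hypothetical names chosen for illustration.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project_to_wall(eye: Vec3, point: Vec3) -> Tuple[float, float]:
    """Return the (x, y) spot on the wall z = 0 where a virtual point
    must be drawn so that it appears in the right direction from the eye.

    Assumption: the eye is inside the chamber (z > 0) and the virtual
    point lies on the wall side of the eye (point z < eye z).
    """
    ex, ey, ez = eye
    px, py, pz = point
    if ez <= 0:
        raise ValueError("eye must be inside the chamber (z > 0)")
    if pz >= ez:
        raise ValueError("point must lie between the eye and the wall, or beyond the wall")
    # Parameter t along the ray eye -> point at which the ray crosses z = 0.
    t = ez / (ez - pz)
    return (ex + t * (px - ex), ey + t * (py - ey))


def off_axis_frustum(
    eye: Vec3,
    wall_x: Tuple[float, float],
    wall_y: Tuple[float, float],
    near: float,
) -> Tuple[float, float, float, float]:
    """Return (left, right, bottom, top) of an asymmetric view frustum
    at distance `near` from the eye, for a wall spanning wall_x by wall_y
    in the plane z = 0. The frustum becomes skewed as the eye moves
    away from the wall's center line.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be inside the chamber (z > 0)")
    scale = near / ez  # similar triangles: near plane vs. wall plane
    return (
        (wall_x[0] - ex) * scale,
        (wall_x[1] - ex) * scale,
        (wall_y[0] - ey) * scale,
        (wall_y[1] - ey) * scale,
    )


if __name__ == "__main__":
    virtual_post = (0.5, 0.0, -1.0)  # one unit "behind" the wall
    for eye in [(0.0, 0.0, 1.0), (0.2, 0.0, 1.0)]:
        print(eye, project_to_wall(eye, virtual_post))
```

As the tracked eye position changes, the same virtual object has to be drawn at a different place on the wall. That shift is what makes the object appear fixed in space to the moving animal rather than painted on the display.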

My lab has a science side and a technology side. Scientifically, we want to understand the feedback cycle between activity in neural circuits and behavior. We focus on spatial awareness and spatial cognition in genetic model species such as the fruit fly, Drosophila. We use cutting-edge tools: cameras and tracking systems for measuring animal behavior, genetic tools for precisely modifying the nervous system, and virtual reality for modifying the animal's sensory-motor feedback in specific ways. On the technology side, we develop many of the tools that we need for our research, such as markerless animal tracking systems and virtual reality display systems. We release these technologies as open source so that this aspect of our work can also help other researchers.

The experiments and their associated images and movies were done in collaboration with Prof. Dr. Kristin Tessmar-Raible from the Max F. Perutz Laboratories, a joint venture of the University of Vienna and the Medical University of Vienna, Austria.

(left) Research assistant Abina Boesjes working with our "FlyCube" VR systems. (right) PhD student Etienne Campione, who normally works with the VR systems, preparing flies for his experiments.

How does immersive virtual reality help us understand animal vision, perception, cognition and behavior?

The purpose is really to manipulate the feedback that an animal experiences as it moves in its environment. To understand brain and behavior, we need to be able to do this, because feedback is the natural state of affairs for animals, and their brains evolved to be feedback controllers. Two cases are especially important. The first is spatial cognition. Here, animals (and humans) use every sensation they can get to update their mental map of where they are and how the world is laid out. However, traditional experimental techniques have a hard time letting the animal move freely, and thus receive correct proprioceptive and vestibular feedback, while also presenting visual stimuli such as distant landmarks. The other case is collective behavior, where it is the feedback between animals that has been difficult to control and precisely manipulate experimentally. For both of these cases, I think VR for freely moving animals gives researchers a powerful new tool that complements existing techniques, notably virtual reality for restrained animals and experiments on freely behaving animals without virtual reality.

A virtual elevated maze paradigm for freely moving mice

What do you mean by "freely moving" animals?

The animal moves through an experimental chamber without any wire, tether or harness. Furthermore, our tracking system works without markers, so the animal doesn’t have to wear any special devices. This is what lets it work on small animals such as fruit flies and larval zebrafish. (Of course, the VR only works within the arena, so there is a limit on how far the animal can actually move freely.)

Photo realistic and naturalistic VR for freely flying Drosophila

Tell us more about the VR setup. Why is it important to make videos rather than static images?

Videos have always been very important for this work. We need the temporal dimension to understand our experiments. As I mentioned, the essence of this system is to precisely manipulate feedback loops, and thus we need to see how events unfold over time to understand causation. Even for debugging the experiments, videos are critically important. We acquire terabytes of video in the lab every day. Thanks to live tracking, we can throw most of it away and just record the animal's position.

Social feedback experiment with real and virtual fish

Can we learn anything about human perception from these studies?

Absolutely. The way that rodents build maps of the world is a good model for humans. For example, John O’Keefe, Edvard Moser and May-Britt Moser won the 2014 Nobel Prize in Physiology or Medicine for their work in this field in rats because of the relevance of their discoveries for humans. They didn’t solve everything, though, and in fact Edvard Moser and Flavio Donato wrote an opinion piece recently about how we need better VR technology. Hopefully they will agree that our system is a useful step.

Zebrafish in 2AFC teleportation experiment

Zebrafish swims among a cloud of 3D dots

About the Straw Lab

The lab of Andrew Straw studies neural circuits and behavior at the University of Freiburg, Germany. By developing advanced technical systems such as virtual reality arenas, they investigate the mechanistic basis of visual behaviors such as navigation in Drosophila.

More information about the study: https://strawlab.org/freemovr

Interview conducted by Alexis Gambis, Executive Director of Imagine Science Films